Day 1: Public Safety Tech

Public safety technology plays a crucial role in protecting communities, from preventing incidents to responding to them more effectively. Inspired by YC’s Requests for Startups (RFS), I explored how technology can improve safety outcomes.

Approach

What I set out to do:

Build a system that incorporates real-time data and predictive modeling, and pairs them with a natural interface for proactive safety.

Technology used:

Python, JS, FastAPI, OpenAI API, Langchain, Pandas, Google Colab

How I approached the problem:

Use real-time and historical data

Build a natural interface for the user to interact with the system

Let the system proactively alert the user based on the data

Results

The final prototype:

The prototype lets users:

Ask personalized safety questions:

“What’s the risk level on Market Street at 10 PM?”

“Where is the safest place near me right now?”

Receive proactive alerts: Users get notified if they enter high-risk zones

These questions are answered using live dispatch data and metrics from recent history (the last few weeks).

Insights, Build Process & Technical Details

Initially, I wanted to train a model on incident report data that could predict the distribution of risk given the time of day, day of the week, and day of the year (meaning it learns how these factors affect crime). I still think this is possible with more data, but in the time allocated I was only able to train a model that learns a rough average of how crime is distributed across San Francisco.

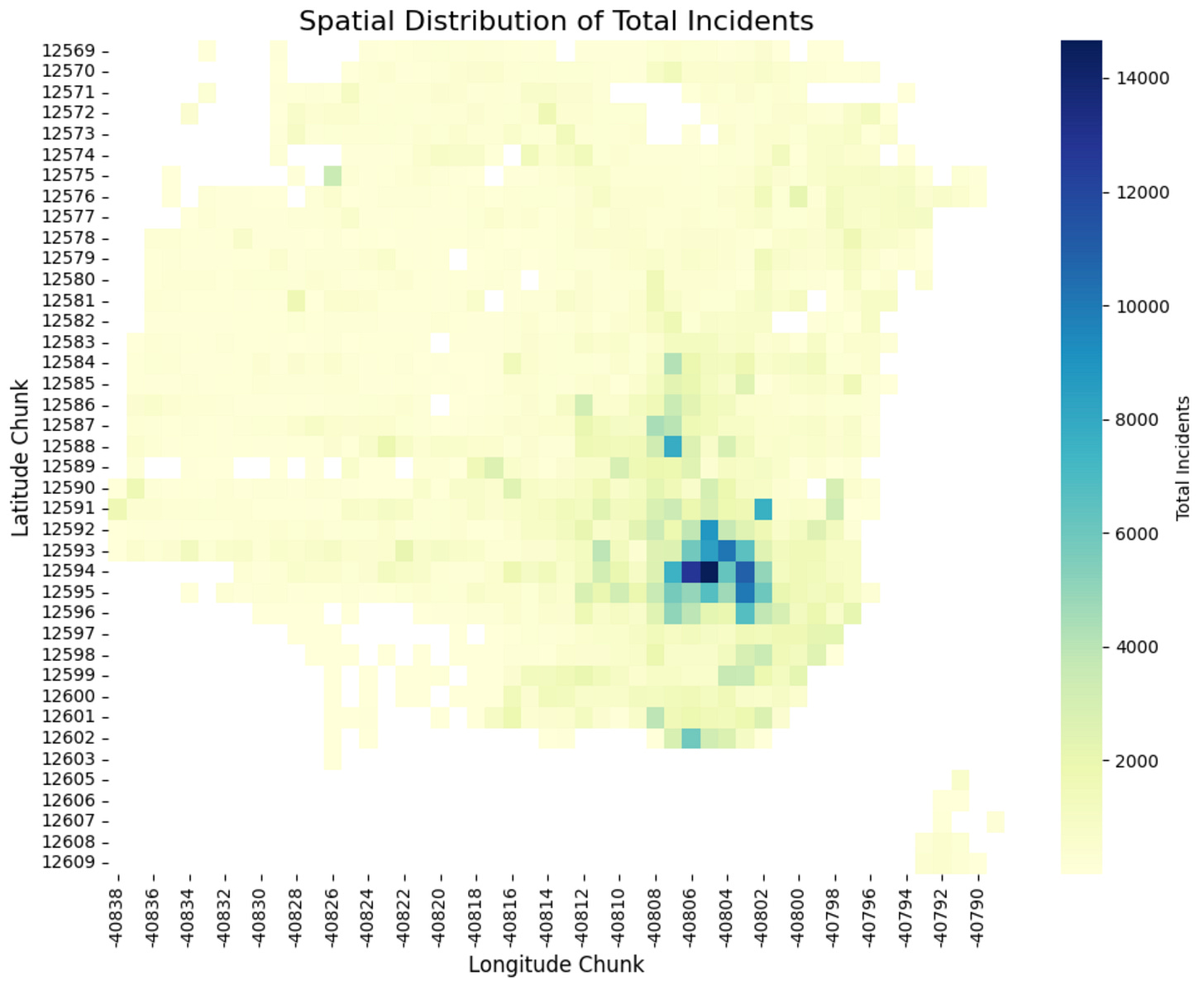

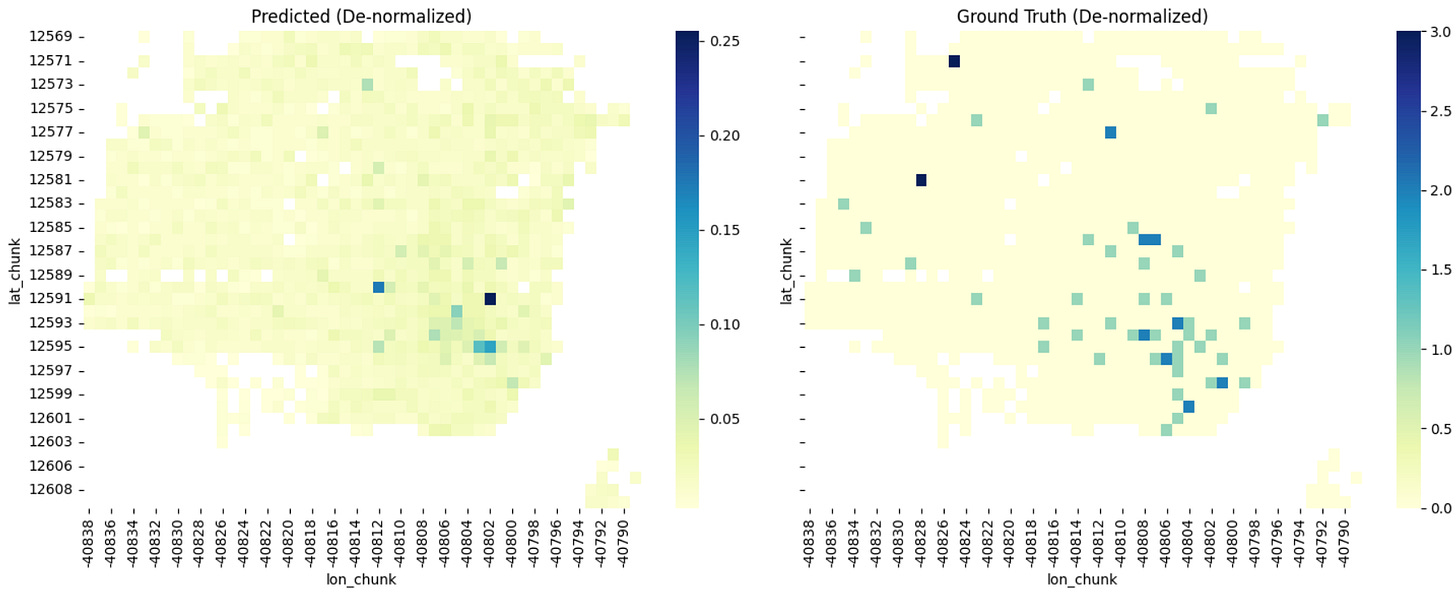

I divided the area into cells of about 300 x 300 m and time into 3-hour blocks (with a stride of 1 hour for continuity). Then I trained both a DCRNN (Diffusion Convolutional Recurrent Neural Network) and a ViT-style patch transformer to predict the distribution of incidents in a given time block.
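To make the binning concrete, here is a minimal sketch of mapping incidents onto a roughly 300 m grid and onto overlapping 3-hour windows that start every hour. The grid origin, the flat-earth conversion constant, and the function names are assumptions for illustration, not the code used in the prototype.

```python
import math
from collections import Counter
from datetime import datetime, timedelta

CELL_METERS = 300.0             # approximate cell edge, from the text
WINDOW_HOURS = 3                # length of each time block, from the text
METERS_PER_DEG_LAT = 111_320.0  # rough constant, adequate at city scale

# Hypothetical grid origin: a point southwest of San Francisco.
ORIGIN_LAT, ORIGIN_LON = 37.70, -122.52


def cell_index(lat, lon):
    """Map a lat/lon pair to a (row, col) cell on the ~300 m grid."""
    meters_per_deg_lon = METERS_PER_DEG_LAT * math.cos(math.radians(ORIGIN_LAT))
    row = int((lat - ORIGIN_LAT) * METERS_PER_DEG_LAT // CELL_METERS)
    col = int((lon - ORIGIN_LON) * meters_per_deg_lon // CELL_METERS)
    return row, col


def window_starts(ts):
    """Start times of every 3-hour window containing ts.

    Windows are assumed to start on each full hour (stride of 1 hour),
    so each timestamp falls into three overlapping windows.
    """
    hour = ts.replace(minute=0, second=0, microsecond=0)
    return [hour - timedelta(hours=k) for k in range(WINDOW_HOURS)]


def bin_incidents(incidents):
    """Count incidents per (cell, window_start) from (lat, lon, datetime) tuples."""
    counts = Counter()
    for lat, lon, ts in incidents:
        cell = cell_index(lat, lon)
        for start in window_starts(ts):
            counts[(cell, start)] += 1
    return counts
```

Because of the 1-hour stride, one incident contributes to three windows, which smooths the transition between consecutive time blocks.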

The model learned general crime patterns but struggled with time-specific nuances like daily or weekly cycles. I think this can be resolved with multiple resolutions of sinusoidal time embeddings to represent year-scale, week-scale, and day-scale effects along with a better training process and better model hyperparameters.
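One way the multi-resolution time embedding could look is a sine/cosine pair per cycle, so that 11 PM and 1 AM, or December and January, land close together in feature space. This is a sketch of the idea only; the period values and the function name are assumptions, and it was not part of the trained models.

```python
import math
from datetime import datetime

# Cycle lengths in hours for the three scales named above.
PERIODS_HOURS = {"day": 24.0, "week": 24.0 * 7, "year": 24.0 * 365.25}


def time_embedding(ts):
    """Return [sin, cos] pairs for the day-, week-, and year-scale phase of ts."""
    hour_of_day = ts.hour + ts.minute / 60.0
    hour_of_week = ts.weekday() * 24.0 + hour_of_day
    hour_of_year = (ts.timetuple().tm_yday - 1) * 24.0 + hour_of_day
    positions = {"day": hour_of_day, "week": hour_of_week, "year": hour_of_year}

    features = []
    for name, period in PERIODS_HOURS.items():
        angle = 2.0 * math.pi * positions[name] / period
        features.extend([math.sin(angle), math.cos(angle)])
    return features
```

The resulting six values would be concatenated to each time block's input features, giving the model an explicit signal for daily, weekly, and seasonal phase.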

Final Solution

I wanted to use this model for prediction, but I identified a problem that public safety systems can face: if areas are just generically marked as unsafe, there is no hope of recovery, because the label never reflects improving conditions. I found the easiest way to combat this was to incorporate recent history and live data.

For recent history, I used a historical incidents dataset from SFData, which I found is updated frequently and lags by about 2 days. For live data, I used police dispatch reports, which lag by about 10 minutes.

For this build, I started by clustering crime reports from the past few days and using the clusters to alert the user to high-risk areas. I found that this leaves out a lot of nuance and detail that a better system can easily access.
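For reference, the clustering-and-alert idea can be sketched in a few lines. The version below uses a simple greedy, single-pass clustering as a stand-in, since the post does not say which clustering algorithm was used; the 250 m radius, the minimum report count, and all function names are assumptions.

```python
import math

EARTH_RADIUS_M = 6_371_000.0


def distance_m(lat1, lon1, lat2, lon2):
    """Approximate ground distance in meters (equirectangular, fine at city scale)."""
    mean_lat = math.radians((lat1 + lat2) / 2.0)
    dx = math.radians(lon2 - lon1) * math.cos(mean_lat) * EARTH_RADIUS_M
    dy = math.radians(lat2 - lat1) * EARTH_RADIUS_M
    return math.hypot(dx, dy)


def cluster_reports(points, radius_m=250.0):
    """Greedily group (lat, lon) reports: join the first cluster within radius_m."""
    clusters = []
    for lat, lon in points:
        for c in clusters:
            if distance_m(lat, lon, c["lat"], c["lon"]) <= radius_m:
                n = c["count"]
                # Update the centroid as a running mean of member points.
                c["lat"] = (c["lat"] * n + lat) / (n + 1)
                c["lon"] = (c["lon"] * n + lon) / (n + 1)
                c["count"] = n + 1
                break
        else:
            clusters.append({"lat": lat, "lon": lon, "count": 1})
    return clusters


def should_alert(user_lat, user_lon, clusters, min_count=5, radius_m=250.0):
    """True if the user is within radius_m of a cluster with enough reports."""
    return any(
        c["count"] >= min_count
        and distance_m(user_lat, user_lon, c["lat"], c["lon"]) <= radius_m
        for c in clusters
    )
```

A cluster here only keeps a centroid and a count, which illustrates the limitation: the category, time, and details of each report are lost once the points are merged.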

For the natural interface, I used OpenAI’s GPT-4o-mini model (a production build would probably use a fine-tuned Llama instead). To ground the model in the available data without loading all of it into the context window, I gave it a suite of tools for accessing the relevant parts of the data.

Reusing the chunking and clustering, I let the LLM run a radius and time-of-day search over events in recent history and dispatch reports. I found that this strikes a good balance between not limiting the model’s capabilities and keeping it analytical and grounded. I also added a place → lat/long search tool, since all the data is in that format, so the user can describe a location in more natural terms.
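A radius and time-of-day search tool of this kind might look like the sketch below. The `Incident` fields, the function names, and the hour-range convention (start inclusive, end exclusive, wrapping past midnight) are assumptions; the actual tool signatures are not given in this post.

```python
import math
from dataclasses import dataclass
from datetime import datetime

EARTH_RADIUS_M = 6_371_000.0


@dataclass
class Incident:
    # Hypothetical record shape; real datasets carry more fields.
    lat: float
    lon: float
    when: datetime
    category: str


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two lat/lon points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def search_incidents(incidents, lat, lon, radius_m, start_hour, end_hour):
    """Incidents within radius_m whose hour of day is in [start_hour, end_hour).

    A range such as 21 to 2 wraps past midnight.
    """
    def in_hours(hour):
        if start_hour <= end_hour:
            return start_hour <= hour < end_hour
        return hour >= start_hour or hour < end_hour

    return [
        inc for inc in incidents
        if in_hours(inc.when.hour)
        and haversine_m(lat, lon, inc.lat, inc.lon) <= radius_m
    ]
```

For a question like “What’s the risk level on Market Street at 10 PM?”, the model would first resolve the place to coordinates and then call something like `search_incidents(data, lat, lon, 500, 21, 23)`.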

Using the system prompt, I guided the model to make a plan of analysis, execute that with the tools, and then finally review the data and make a conclusion.
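The plan, execute, review flow can be sketched as a system prompt plus a small dispatcher that routes the model's tool calls to Python functions. The prompt wording, the tool names, and the placeholder tool bodies below are all hypothetical, and the sketch leaves out the model call itself (the prototype used LangChain and the OpenAI API for that part).

```python
import json

# Hypothetical prompt text illustrating the three-step structure.
SYSTEM_PROMPT = """You are a public safety analyst.
1. Plan: list which locations and time ranges you need to examine.
2. Execute: call the available tools to gather the data in your plan.
3. Review: summarize what the data shows and state a conclusion.
Only make claims that are supported by tool results."""


def search_events(lat, lon, radius_m, start_hour, end_hour):
    """Placeholder tool: a real one would query recent history and dispatch data."""
    return {"count": 0, "events": []}


def geocode_place(place):
    """Placeholder tool: a real one would resolve a place name to lat/long."""
    return {"place": place, "found": False}


TOOLS = {"search_events": search_events, "geocode_place": geocode_place}


def run_tool_call(name, arguments_json):
    """Execute one tool call and return a JSON string to send back to the model."""
    if name not in TOOLS:
        return json.dumps({"error": f"unknown tool: {name}"})
    try:
        args = json.loads(arguments_json)
        result = TOOLS[name](**args)
    except (json.JSONDecodeError, TypeError) as exc:
        # Malformed JSON or wrong arguments: report it so the model can retry.
        return json.dumps({"error": str(exc)})
    return json.dumps(result)
```

Returning errors as data rather than raising lets the model see what went wrong and correct its next call during the execute step.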

Future Directions

Improvements on this solution:

Fine-tune LLMs to be faster and cheaper at analysis

Incorporate data from local news & possibly social media

Use other types of data such as weather, AQI, lighting, and emergency alerts

Allow it to create its own analysis tools and find its own datasets

Similar to Waze, add user-reported data

Currently, queries cost almost 10 cents each. Fine-tuning an open-source model could likely reduce this to fractions of a cent.

Other Ideas:

Can we use video-generating world models fine-tuned on security footage to predict incidents a few seconds ahead of time?

Using all types of data, can models extract non-obvious indicators of future incidents like in finance?

Startups

Create an API that can be used to make:

A better map application that incorporates not only traffic data but safety/risk for routing.

Insights for corporate travel safety and response

Key Takeaways

Real-time data is crucial - the context of an area is always changing. There may be a large music event, a protest, bad weather, etc. All of these insights are useful for getting the best assessment of risk/safety.

Individual-level proactive safety is important - why don’t we have a personal safety assistant watching our backs? With all the data that is available and the parsing/reasoning technology we now have (LLMs), this is another monotonous task that should be automated.

Bias mitigation is critical - we can’t let over-policing of areas and general stereotypes of specific neighborhoods skew the data for risk. Training on diverse datasets and using natural language to review incident reports helps work in this direction.

AI-driven insights reduce information overload - data is available in incredible volume. Using AI to analyze it and produce simple insights that can be digested in a few seconds, and that don’t get in the way of life, is important to adoption and integration.

Check out updates on X ➡️ #AdventOfYC | @AshrayGup